According to a report by Capgemini, business and technology executives overwhelmingly believe that data is one of their most critical strategic assets. And yet, only 20% of executives trust their data.

Poor data quality was the primary reason behind this lack of trust. Capgemini found that fewer than a third (27%) of executives were happy with their data quality, with concerns ranging from data sourcing to data veracity.

Data is the driving force behind a successful business, shaping decision-making and enabling innovation. So, when data quality slips, the consequences can be serious: inaccurate insights, wasted resources, lost revenue or even hefty fines. Poor-quality data can also create a cycle of errors that ultimately hurts the bottom line and hinders growth.

That’s why more and more executives are looking for smarter ways to manage their most precious business resource, seeking data management solutions that will offer them an accurate and unified view of their organisation’s data pipelines and systems. This harmonised view of data is known as a ‘golden source’.

What is a golden source of data?

A golden source of data is the single, authoritative source of data within an organisation. It represents the most accurate and complete version of a company’s data across all its different pipelines and provides a reliable, trustworthy reference point that a business can use to build insights, improve operational efficiency and enhance customer experiences.

There are many different terms that encapsulate this concept, including a single source of truth, a golden record or a master dataset. Regardless of what you call it, it’s important to remember that an organisation’s golden source of data will never be static. It is a dynamic record that reflects the most up-to-date and accurate information available. This is why creating a golden source requires sophisticated, well-maintained data management and data governance strategies.

What would a golden source look like?

According to the UK Data Management Association, there are six core pillars of data quality: accuracy, consistency, completeness, timeliness, uniqueness, and validity. Each pillar is essential for ensuring that the data a business is using is robust, reliable and ready to use.

Let’s take a closer look at what these would mean in practice:

Accurate: accuracy refers to data that correctly represents real-world facts, values, conditions or events. For example, is the telephone number in your database the same as your client’s actual number? Is the recorded sale price the same as what a client sold their asset for? If data is inaccurate, any decisions, actions or insights based on it will be flawed.

Consistent: consistency refers to the extent to which data is compatible and uniform across different systems and datasets. One example would be ensuring that all the dates in your database are entered in a single format, such as DD/MM/YY, rather than a mix of DD/MM/YY and MM/DD/YY. When data is inconsistent, it can lead to misinterpretation and inaccurate insights.

Complete: this describes whether a dataset contains all the information it should, without gaps or missing values. Incomplete data can skew analysis, lead to errors or leave you unable to complete certain business processes. The more complete a dataset, the more comprehensive your analysis and the more informed your decision-making will be.

Timely: this refers to whether a dataset is up to date and available when needed. This doesn’t necessarily mean that every business requires real-time data, but rather that the time lag between collection and availability is appropriate for the intended use. For example, stock prices delayed by a day might be fine for weekly trend analysis but not for active trading.

Unique: this refers to the absence of duplicate records from a dataset, meaning each real-world entity, such as a client or a transaction, is recorded only once. Duplicate data can lead to distorted analysis, inaccurate reporting or confusion in your operations. For example, if a client is duplicated in your system, you might charge them twice for the same transaction, leading to negative client experiences and reducing trust in your business.

Valid: this refers to data that conforms to an expected format, type or range. This is critical in ensuring that data can be processed, interpreted accurately and used effectively across different operations. A simple example is the UK postcode, which should always consist of five to seven characters (excluding the space). If a postcode doesn’t conform to this standard, it is not valid.
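To make these pillars more concrete, here is a minimal Python sketch of automated checks for five of them. The record layout, the field names (`client_id`, `postcode`, `signup_date`, `last_updated`), the 30-day freshness threshold and the simplified postcode pattern are all hypothetical assumptions for illustration, not an industry standard. Accuracy is deliberately left out: it can only be verified against an external reference, such as the client themselves, not from the data alone.

```python
import re
from collections import Counter
from datetime import date, datetime, timedelta

# Hypothetical client records; field names and values are illustrative only.
RECORDS = [
    {"client_id": "C001", "phone": "020 7946 0000", "postcode": "SW1A 1AA",
     "signup_date": "14/03/24", "last_updated": date(2024, 6, 1)},
    {"client_id": "C002", "phone": None, "postcode": "ABC",
     "signup_date": "03/14/24", "last_updated": date(2023, 1, 10)},
    {"client_id": "C001", "phone": "020 7946 0000", "postcode": "SW1A 1AA",
     "signup_date": "14/03/24", "last_updated": date(2024, 6, 1)},
]

REQUIRED_FIELDS = ("client_id", "phone", "postcode", "signup_date")

# Simplified UK postcode shape (an assumption, looser than official rules):
# 1-2 letters, a digit, an optional letter/digit, optional space, then a
# digit and two letters. That gives 5-7 characters excluding the space.
POSTCODE_RE = re.compile(r"^[A-Z]{1,2}[0-9][A-Z0-9]? ?[0-9][A-Z]{2}$")


def check_completeness(records):
    """Completeness: (index, field) pairs where a required field is missing or empty."""
    return [(i, f) for i, r in enumerate(records)
            for f in REQUIRED_FIELDS if not r.get(f)]


def check_uniqueness(records, key="client_id"):
    """Uniqueness: key values that appear in more than one record."""
    counts = Counter(r[key] for r in records)
    return sorted(k for k, n in counts.items() if n > 1)


def check_validity(records):
    """Validity: indices whose postcode does not match the simplified pattern."""
    return [i for i, r in enumerate(records)
            if not POSTCODE_RE.match((r.get("postcode") or "").upper())]


def check_consistency(records, fmt="%d/%m/%y"):
    """Consistency: indices whose signup_date does not parse in the agreed format."""
    bad = []
    for i, r in enumerate(records):
        try:
            datetime.strptime(r["signup_date"], fmt)
        except (ValueError, TypeError, KeyError):
            bad.append(i)
    return bad


def check_timeliness(records, as_of, max_age=timedelta(days=30)):
    """Timeliness: indices whose last_updated is older than the allowed lag."""
    return [i for i, r in enumerate(records)
            if as_of - r["last_updated"] > max_age]


if __name__ == "__main__":
    as_of = date(2024, 6, 15)
    print("incomplete:", check_completeness(RECORDS))
    print("duplicate ids:", check_uniqueness(RECORDS))
    print("invalid postcodes:", check_validity(RECORDS))
    print("inconsistent dates:", check_consistency(RECORDS))
    print("stale records:", check_timeliness(RECORDS, as_of))
```

Each check returns the offending records rather than a simple pass/fail flag, so that exceptions can be investigated and corrected. A format check like this only catches unambiguous mismatches: a date such as 05/06/24 parses under both conventions, so consistency ultimately depends on agreeing a format at the point of entry.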

What are the benefits of having a golden source of data?

When an organisation establishes a golden source of data, it is better placed to make data-driven decisions, improve its operational efficiency and maintain a high-quality customer experience. With a single, authoritative and unified view of its data universe, a business can be more confident in the accuracy, quality and value of its outputs.

Relying on faulty outputs is a major corporate risk. It can lead to poor customer outcomes, regulatory sanctions and reputational damage. In fact, according to research and consulting firm Gartner, poor data quality costs organisations an average of $12.9 million (around £9.9 million) every year.

High data quality is also essential to fully exploit the possibilities of artificial intelligence (AI). As the old saying goes: garbage in, garbage out. In other words, if the underlying data is disordered, incomplete, out of date or otherwise inaccurate, AI can hardly be blamed for producing inaccurate answers.

Is a golden source of data achievable?

Establishing a golden source of data is not an easy task for any organisation. It requires a lot of work: businesses must eliminate silos across their systems and data pipelines, identify and resolve any inconsistencies or redundancies within their datasets, and find an effective and efficient way to consolidate all their data into a single, harmonised view.

It can be a time-consuming and resource-intensive process, and that’s only the beginning. One study, published in Harvard Business Review, found that 47% of newly created data records within businesses have at least one work-impacting error. This is a concerning statistic when we consider that data volumes within organisations are growing by around 63% a month on average, according to data professionals. So, once a golden source of data has finally been established, the business must work even harder to maintain it and ensure it remains accurate and up to date.

How Raw Knowledge can help businesses establish their golden source

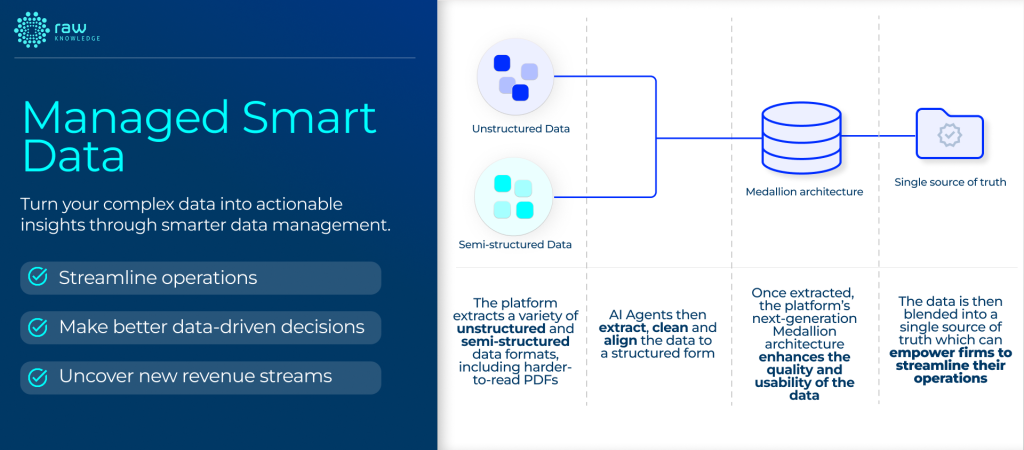

No matter what form your organisation’s data takes or where it comes from, Raw Knowledge’s pioneering Managed Smart Data platform can help create a single, unified and traceable view of your disparate data sources so you can streamline and scale your business, make better data-driven decisions, and meet regulatory requirements with ease.

The platform can bring together different data sources within its lakehouse architecture, without the need to have any predefined output formats in mind. It will then enhance the quality and usability of this ingested data using its three-layer design.

The first, bronze, layer stores data in its original form and automatically records any changes made to it. This provides a fully traceable and granular view of an organisation’s data, which can help it understand the journey its data has taken and how it has changed over time.

The second, silver, layer cleans and validates an organisation’s data, ensuring that exceptions, issues and errors are identified and corrected before they can move downstream.

The final, gold, layer then blends these diverse data sources into a single, harmonised view that is ready and optimised for business intelligence, reporting and decision-making. By providing access to a clean, unified view of an organisation’s data, the Managed Smart Data (MSD) platform helps eliminate the need for multiple systems, reduce costs and improve business agility.
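As a rough illustration of how a bronze, silver and gold design can work in general, the Python sketch below keeps raw, append-only records (bronze), cleans and validates them while routing failures to an exceptions list (silver), and blends the surviving rows into one record per client (gold). It is not the MSD platform’s implementation. The names `Bronze`, `to_silver` and `to_gold`, the cleaning rules and the “latest non-empty value wins” merge policy are all hypothetical assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Bronze:
    """Hypothetical bronze layer: an append-only log, so raw history is never lost."""
    log: list = field(default_factory=list)

    def ingest(self, source, payload):
        # Store a copy of the raw payload exactly as received, with lineage metadata.
        self.log.append({"source": source,
                         "ingested_at": datetime.now(timezone.utc),
                         "payload": dict(payload)})

    def history(self, client_id):
        # Every raw version ever received for one client, in ingestion order.
        return [e for e in self.log if e["payload"].get("client_id") == client_id]


def to_silver(bronze):
    """Hypothetical silver layer: clean and validate; return (clean_rows, exceptions)."""
    clean, exceptions = [], []
    for entry in bronze.log:
        # Build a new dict so the bronze copy stays untouched; trim stray whitespace.
        row = {k: v.strip() if isinstance(v, str) else v
               for k, v in entry["payload"].items()}
        if not row.get("client_id"):
            # Route rows that fail validation to an exceptions list for correction.
            exceptions.append((entry["source"], "missing client_id"))
            continue
        # Normalise email addresses to lower case; empty or absent becomes None.
        row["email"] = (row.get("email") or "").lower() or None
        clean.append({**row, "_source": entry["source"]})
    return clean, exceptions


def to_gold(silver_rows):
    """Hypothetical gold layer: one record per client; later non-empty values win."""
    golden = {}
    for row in silver_rows:  # rows arrive in ingestion order
        record = golden.setdefault(row["client_id"], {})
        for k, v in row.items():
            # Skip lineage columns (prefixed "_") and empty values.
            if not k.startswith("_") and v not in (None, ""):
                record[k] = v
    return golden


if __name__ == "__main__":
    bronze = Bronze()
    bronze.ingest("crm", {"client_id": "C001", "name": "Ada Lovelace",
                          "email": " ADA@EXAMPLE.COM "})
    bronze.ingest("billing", {"client_id": "C001", "phone": "020 7946 0000"})
    bronze.ingest("billing", {"client_id": "", "phone": "000"})
    silver, issues = to_silver(bronze)
    print("exceptions:", issues)
    print("golden record:", to_gold(silver))
```

The “latest value wins” rule is only one possible merge policy; in practice, sources are often ranked by trustworthiness or governed by field-level rules instead.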